
Claude 3’s Vision Capabilities are Unbelievable

Unlock the power of the Claude 3 models to convert images into actionable structured outputs seamlessly.

Until recently, OpenAI's models were the best in class for generating structured JSON output and for function calling. But Anthropic has just released its Claude 3 family of models, and the models in this family are very good at reasoning, coding, and structured data generation.

Because these models can generate valid structured JSON output and also have good reasoning skills, we can use them for function calling. I recently wrote a small Python package, claudetools, that helps with function calling using the Claude 3 family of models.
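To make the link between structured JSON output and function calling concrete, here is a minimal sketch of the dispatch step in plain Python. It assumes, hypothetically, that the model has been prompted to reply with an object of the form `{"name": ..., "arguments": {...}}`. The `get_weather` tool, the `TOOLS` registry, and `dispatch` are illustrative names only, not part of claudetools or any Anthropic API.

```python
import json
from typing import Any, Callable, Dict


# Hypothetical tool the model may "call"; a stub standing in for any real function.
def get_weather(city: str, unit: str = "celsius") -> str:
    return f"Weather lookup for {city} in {unit} (stub)"


# Registry mapping tool names (as the model would emit them) to Python callables.
TOOLS: Dict[str, Callable[..., Any]] = {"get_weather": get_weather}


def dispatch(model_output: str) -> Any:
    """Parse a JSON function call emitted by the model and invoke the matching tool.

    Assumes the (hypothetical) reply format {"name": <tool>, "arguments": {...}}.
    """
    call = json.loads(model_output)  # raises json.JSONDecodeError on malformed JSON
    name = call["name"]
    if name not in TOOLS:
        raise KeyError(f"unknown tool: {name}")
    arguments = call.get("arguments", {})
    if not isinstance(arguments, dict):
        raise TypeError("arguments must be a JSON object")
    return TOOLS[name](**arguments)


reply = '{"name": "get_weather", "arguments": {"city": "Paris"}}'
print(dispatch(reply))
```

The whole scheme only works if the model's JSON is valid and matches the tool's signature, which is why strong structured-output ability matters so much for function calling.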

You can read the following blog post to learn more about Claudetools.

P.S.: With only a few very minor changes, you can use Claudetools as a near drop-in replacement for function calling with OpenAI models.
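Part of what makes such a switch "minor" is that the two APIs describe tools in very similar shapes. As an illustration, and not a description of how Claudetools itself works, here is a sketch of a hypothetical helper, `openai_to_anthropic_tools`, that converts OpenAI-style function definitions (`{"type": "function", "function": {..., "parameters": ...}}`) into the flat `{"name", "description", "input_schema"}` objects used by Anthropic's Messages API. The `get_weather` schema is made up for the example.

```python
from typing import Any, Dict, List


def openai_to_anthropic_tools(openai_tools: List[Dict[str, Any]]) -> List[Dict[str, Any]]:
    """Convert OpenAI-style function definitions into Anthropic-style tool definitions."""
    converted: List[Dict[str, Any]] = []
    for tool in openai_tools:
        # OpenAI nests the definition under "function"; Anthropic expects a flat object.
        fn = tool["function"]
        anthropic_tool: Dict[str, Any] = {
            "name": fn["name"],
            # Both APIs describe arguments with JSON Schema, so the schema carries over
            # unchanged; only the key differs ("parameters" vs "input_schema").
            "input_schema": fn.get("parameters", {"type": "object", "properties": {}}),
        }
        if "description" in fn:
            anthropic_tool["description"] = fn["description"]
        converted.append(anthropic_tool)
    return converted


# Hypothetical OpenAI-style tool definition, used only for illustration.
openai_tools = [{
    "type": "function",
    "function": {
        "name": "get_weather",
        "description": "Get the current weather for a city.",
        "parameters": {
            "type": "object",
            "properties": {"city": {"type": "string"}},
            "required": ["city"],
        },
    },
}]

print(openai_to_anthropic_tools(openai_tools))
```

Because the JSON Schema body is shared, existing tool definitions can usually be reused as-is; only the wrapper around them changes.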

Vision Capabilities

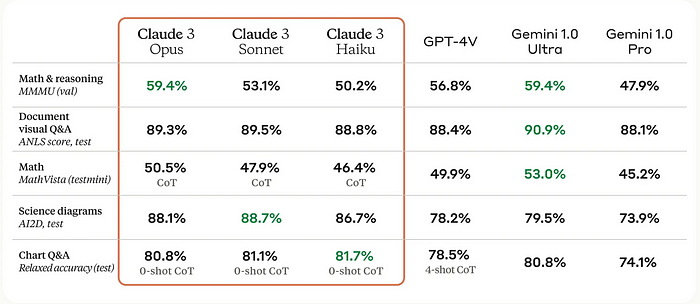

All the models in the Claude 3 family have vision capabilities, which opens up exciting possibilities for multimodal interaction. Their vision performance is on par with the GPT-4-Vision model and even surpasses it on some benchmarks, as shown in the following table.

Thanks to their sophisticated vision capabilities, these models can process a wide variety of visual formats, including photos, charts, graphs, and technical diagrams.

As mentioned above, all the models in the Claude 3 family support vision out of the box and don't require a separate model version, so we can use the Claudetools package directly for function calling with image input.
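Under the hood, an image is sent to Claude 3 as a base64 `image` content block inside an ordinary Messages API request, so the same request can carry tool definitions too. The sketch below builds such a request with the standard library only; it is the raw Messages API payload, not the Claudetools interface. `detect_media_type`, `build_vision_tool_request`, the `record_chart_data` tool, and the placeholder image bytes are all hypothetical, and the magic-byte checks cover the JPEG, PNG, GIF, and WebP formats the API accepts.

```python
import base64
from typing import Any, Dict, List


def detect_media_type(data: bytes) -> str:
    """Guess the image media type from its leading magic bytes."""
    if data.startswith(b"\x89PNG\r\n\x1a\n"):
        return "image/png"
    if data.startswith(b"\xff\xd8\xff"):
        return "image/jpeg"
    if data[:6] in (b"GIF87a", b"GIF89a"):
        return "image/gif"
    if data[:4] == b"RIFF" and data[8:12] == b"WEBP":
        return "image/webp"
    raise ValueError("unsupported image format")


def build_vision_tool_request(image_bytes: bytes, prompt: str, tools: List[Dict[str, Any]],
                              model: str = "claude-3-opus-20240229") -> Dict[str, Any]:
    """Build a Messages API request body combining an image, a text prompt, and tools."""
    image_block = {
        "type": "image",
        "source": {
            "type": "base64",
            "media_type": detect_media_type(image_bytes),
            "data": base64.b64encode(image_bytes).decode("ascii"),
        },
    }
    text_block = {"type": "text", "text": prompt}
    return {
        "model": model,
        "max_tokens": 1024,
        "tools": tools,
        # The image goes before the text prompt within the same user turn.
        "messages": [{"role": "user", "content": [image_block, text_block]}],
    }


# Hypothetical tool for turning a chart image into structured data.
chart_tool = {
    "name": "record_chart_data",
    "description": "Record the data points read from a chart.",
    "input_schema": {
        "type": "object",
        "properties": {
            "points": {
                "type": "array",
                "items": {
                    "type": "object",
                    "properties": {"label": {"type": "string"}, "value": {"type": "number"}},
                },
            },
        },
        "required": ["points"],
    },
}

fake_png = b"\x89PNG\r\n\x1a\n" + b"\x00" * 8  # placeholder bytes, not a real image
request = build_vision_tool_request(fake_png, "Extract the data points from this chart.", [chart_tool])
```

With a real image and an API key, recent versions of the official `anthropic` Python SDK should be able to send this body via `anthropic.Anthropic().messages.create(**request)`; the model's structured answer then comes back as a `tool_use` content block.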